Top AI Crypto Projects for 2026: The Ultimate Guide to AI Altcoins

Quick summary

AI and crypto intersect where compute, data, and trust meet: networks that sell secure compute and verifiable data, decentralized model marketplaces, and tokenized incentives for AI agents are the ones to watch. In 2026, projects that show real integration with cloud providers, audited on-chain compute markets, and steady developer adoption stand out. Below I highlight the practical winners and explain how to evaluate them.

Why AI + Crypto matters now

AI needs three things at scale: cheap, verifiable compute; high-quality, permissioned data; and aligned economic incentives for models and datasets. Crypto contributes programmable money, immutable provenance, and permissioned marketplaces. Projects that stitch these pieces together reduce counterparty friction for enterprises that want on-chain assurance while relying on off-chain compute. Recent platform announcements and infra reports show the sector moving from prototypes to production.

How I choose projects to watch

Pick projects with (1) verifiable technical milestones, (2) institutional integrations (cloud or regulated custodians), and (3) transparent economics (token supply, staking, revenue share). Look for audited smart contracts, published benchmarks, and sustained developer commits rather than launchpad hype.

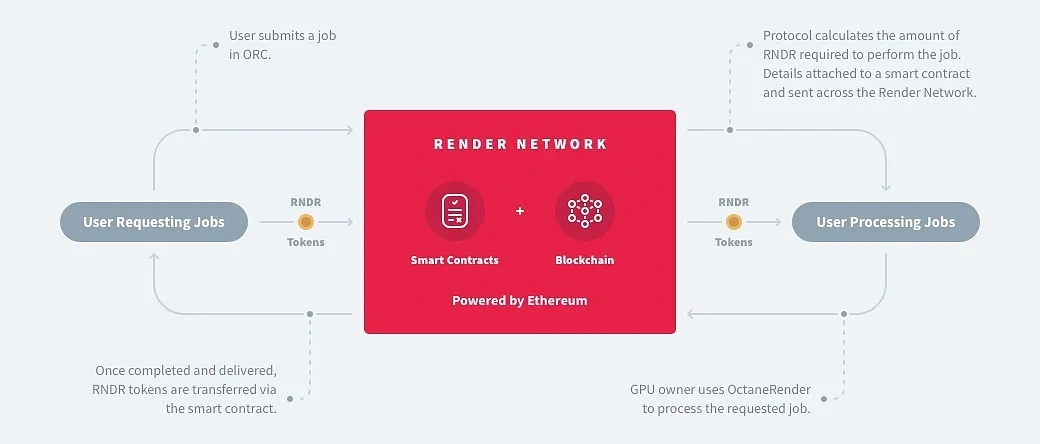

Render Network — decentralized GPU rendering and inferencing

Render is a distributed GPU marketplace that matches render jobs and AI workloads to rented GPU capacity. In 2025–26 Render broadened its focus from graphics rendering to model inference and added incremental support for major renderers; its monthly reports describe steady protocol-level integration with Maxon’s Redshift toolchain. That evolution makes RNDR a pragmatic play for AI workloads that can be parallelized across many nodes.

Why it matters: Render offers a market for GPU cycles in which participants monetize idle GPUs; for AI this means more diverse, geographically distributed compute with economic incentives for providers. If Render can demonstrate low latency, reliable pricing, and robust node reputation, it becomes a practical layer for commercial inference tasks outside hyperscaler clouds.

What to look for next

• Proof-of-service benchmarks demonstrating latency and throughput for common LLM inference.

• Enterprise SLAs or partnerships with rendering studios or cloud providers.

• Token economics that reward long-term node reliability.

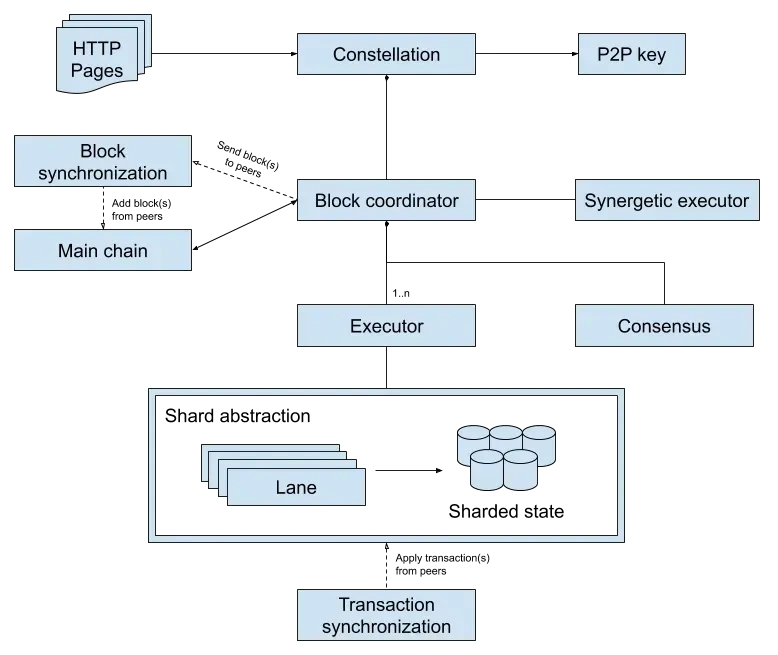

Fetch.ai — agent-based infrastructure for real-time automation

Fetch.ai focuses on autonomous agents and marketplace orchestration for mobility, energy, and IoT workflows. In early 2026 Fetch.ai announced new tooling for AI-assisted developer workflows and agent orchestration, signaling a move from research demos to developer-ready SDKs.

Why it matters: Autonomous economic agents let services trade signals and micro-services in real time. For AI applications that require low-latency negotiation—dynamic pricing, logistics routing—Fetch.ai’s agent framework matters because it combines on-chain settlement with off-chain compute coordination.

What to look for next

• Real-world trials with logistics or energy providers.

• Developer activity around agent marketplaces and SDK adoption.

• Cross-chain messaging for liquidity and settlement.

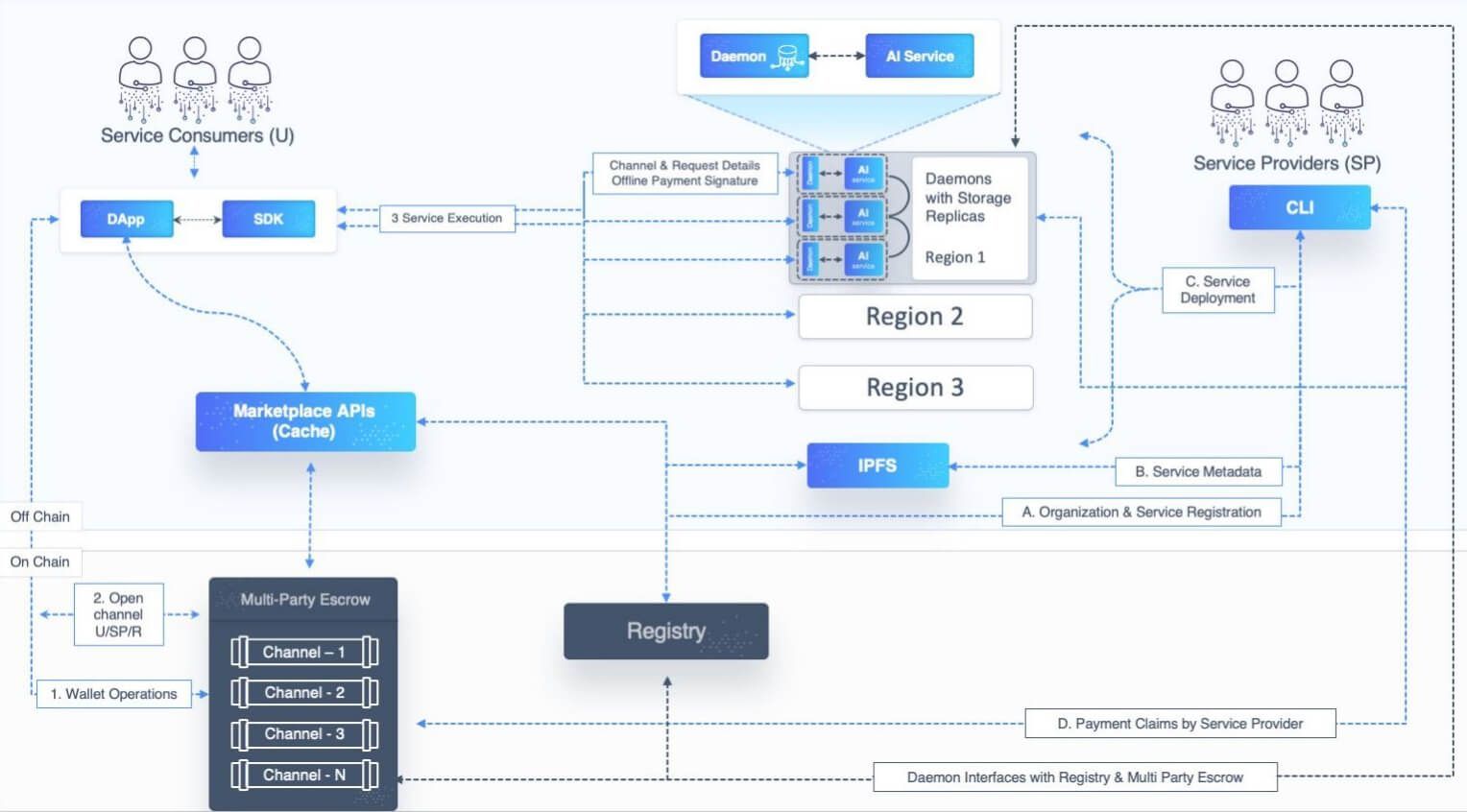

SingularityNET — decentralized model marketplace and governance

SingularityNET has long pitched the idea of a decentralized marketplace for AI models. In 2026 the project continued to run community-facing developer events and workshops to grow adoption of the MeTTa programming language and its model registry. That activity matters because a real marketplace needs both supply (model creators) and demand (enterprises buying inference or model licensing).

Why it matters: A neutral registry with standardized APIs, on-chain payments, and governance reduces vendor lock-in for enterprises buying model services. SingularityNET’s focus on tooling and coders’ events aims to build the supply side needed for a marketplace to function at scale.

What to look for next

• Number of paid model transactions and total value locked in model subscriptions.

• Audit trails proving model provenance and licensing.

• Enterprise integrations showing B2B traction.

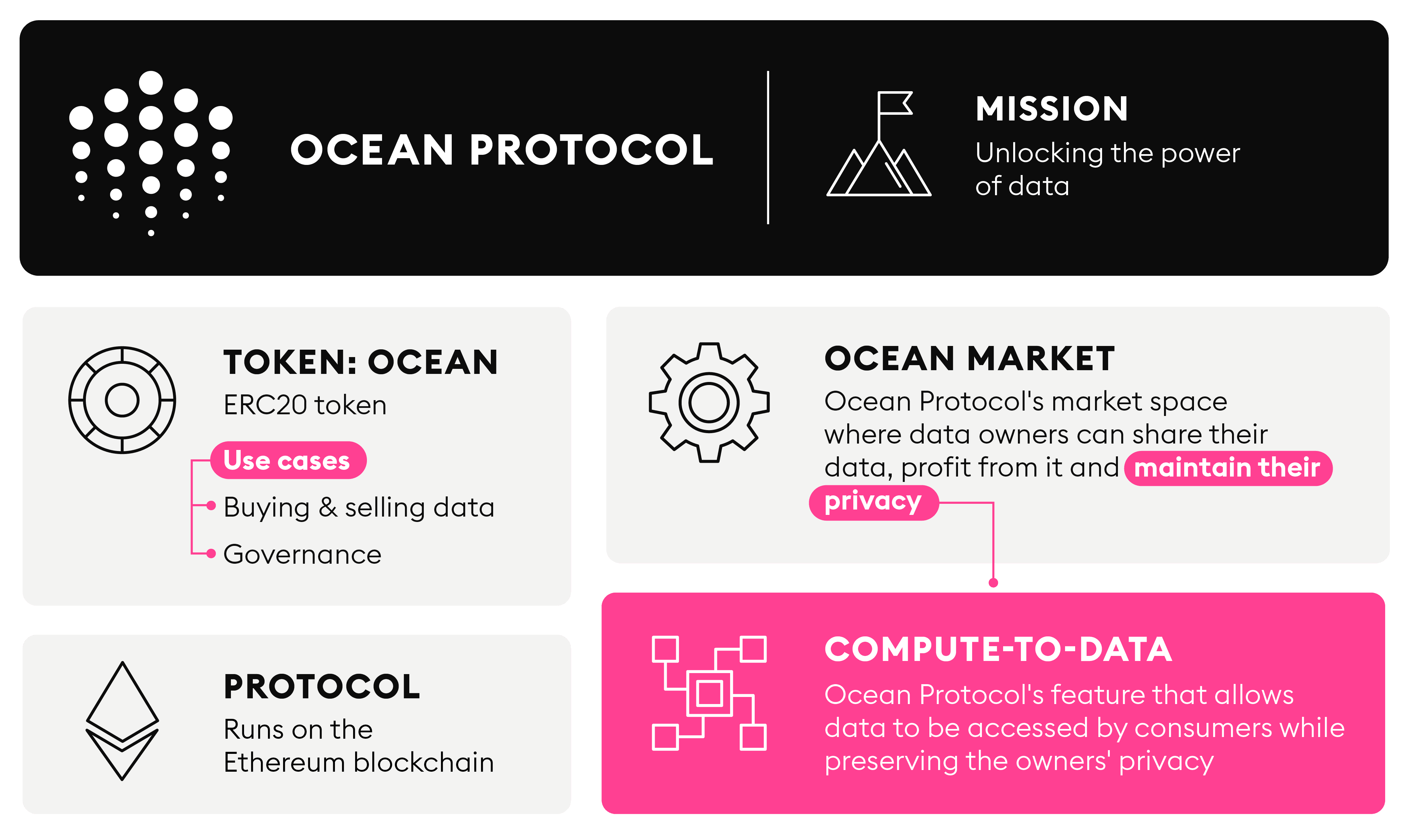

Ocean Protocol — data markets and compute-to-data

Ocean Protocol enables secure data publishing and compute-to-data, where buyers run algorithms on private datasets without extracting the raw data. Its marketplace is one of the few with clear compute-to-data tooling, which lets AI models train on sensitive datasets without exposing them. That design answers a core enterprise barrier: how to monetize data while keeping it private.

Why it matters: AI models starve without curated, compliant data. Ocean’s architecture—tokenized data assets + compute-to-data—lets institutions monetize data while preserving privacy, opening new supply lines for model training and validation that were previously trapped behind legal and operational barriers.

What to look for next

• Volume of paid compute-to-data jobs and repeat buyers.

• Certifications for datasets (e.g., HIPAA, SOC2) and third-party attestations.

• Interoperability with major ML frameworks and data standards.

Emerging winners and speculative plays

Beyond the incumbents, 2026 produced a crop of niche projects that matter if they hit technical milestones: decentralized oracle layers specialized for model checkpoints; tokenized datasets for regulated sectors; ML-specific privacy layers combining MPC and ZK proofs. Watch projects that publish detailed technical roadmaps, independent audits, and—increasingly—cloud partnerships. Industry reporting shows this trend is accelerating as hyperscalers sign deals to expand capacity and enterprises seek hybrid on/off-chain assurances.

Why it matters: Niche infra projects fill specific gaps—provable model lineage, privacy-preserving inference, and verifiable compute. Small teams that solve one critical enterprise pain can become crucial middleware between models and money flows.

How to evaluate a project’s real AI-crypto potential

| Evaluation axis | Why it matters | Red flag |
|---|---|---|
| Technical proofs | Shows the system actually works vs marketing | No benchmarks, or private demos only |
| Enterprise integrations | Cloud or bank partnerships indicate trust | No integrations after token launch |
| Token economics | Aligns incentives for providers and buyers | Excessive team allocation, short vesting |
| Audits | Reduces smart-contract and oracle risk | No independent audits |
| Developer adoption | Sustained commits and SDK usage | Declining activity after launch |

Why it matters: Projects that score well across these axes combine production readiness with governance and economic sustainability. That mix is what moves a protocol from speculation to utility.
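
The five axes in the table above can be turned into a simple scorecard. The sketch below is one possible way to do that, not a standard method: the 0 to 2 scale, the pass threshold of 7, and the project name `HypotheticalProtocol` are all assumptions made for illustration.

```python
from dataclasses import dataclass

# The five axes from the evaluation table. The 0-2 scale and the pass
# threshold are illustrative assumptions, not values from the article.
AXES = (
    "technical_proofs",
    "enterprise_integrations",
    "token_economics",
    "audits",
    "developer_adoption",
)


@dataclass
class Scorecard:
    project: str
    scores: dict  # axis name -> integer from 0 (red flag) to 2 (strong)

    def red_flags(self) -> list:
        """Return the axes scored 0, i.e. those matching a red flag."""
        return [axis for axis in AXES if self.scores.get(axis, 0) == 0]

    def total(self) -> int:
        """Sum the scores across all five axes (missing axes count as 0)."""
        return sum(self.scores.get(axis, 0) for axis in AXES)

    def passes(self, min_total: int = 7) -> bool:
        """Pass only with no red flags and a total at or above min_total."""
        return not self.red_flags() and self.total() >= min_total


# Hypothetical project used only to show the mechanics.
example = Scorecard(
    project="HypotheticalProtocol",
    scores={
        "technical_proofs": 2,
        "enterprise_integrations": 1,
        "token_economics": 2,
        "audits": 0,  # no independent audit published
        "developer_adoption": 2,
    },
)
print(example.total(), example.red_flags(), example.passes())
```

Treating any single red flag as disqualifying is a deliberate choice here: a high total should not be able to hide a missing audit.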

Real metrics that prove adoption

Track monthly paid transactions (not just transfers), active developers, repeat enterprise buyers, and committed GPU hours or data-license revenues. These metrics matter more than token price because they measure real demand and monetization pathways.
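
As a rough sketch of how two of these metrics could be computed, assume you have job records as `(month, buyer_id, is_paid)` tuples. That record format and the sample data are hypothetical; real protocols publish usage data in their own formats.

```python
from collections import Counter

# Hypothetical job records: (month, buyer_id, is_paid). The format is an
# assumption; real data would come from a protocol's logs or an indexer.
jobs = [
    ("2026-01", "studio_a", True),
    ("2026-01", "studio_b", True),
    ("2026-01", "wallet_x", False),  # plain transfer, not a paid job
    ("2026-02", "studio_a", True),
    ("2026-02", "studio_a", True),
    ("2026-02", "lab_c", True),
]


def monthly_paid_jobs(records):
    """Count paid jobs per month, ignoring unpaid transfers."""
    counts = Counter(month for month, _, paid in records if paid)
    return dict(sorted(counts.items()))


def repeat_buyers(records):
    """Return buyers with paid jobs in more than one distinct month."""
    months_by_buyer = {}
    for month, buyer, paid in records:
        if paid:
            months_by_buyer.setdefault(buyer, set()).add(month)
    return sorted(b for b, months in months_by_buyer.items() if len(months) > 1)


print(monthly_paid_jobs(jobs))
print(repeat_buyers(jobs))
```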

Case study snapshot: RNDR vs Ocean vs AGIX

Render’s December 2025 monthly report detailed increasing adoption from creative studios and initial LLM inference jobs, signaling a pivot toward AI workloads. Ocean’s market continues to add datasets and compute-to-data flows, making it attractive for regulated sectors. SingularityNET’s developer workshops aim to increase model supply and standardize APIs for on-chain buying of model services. Together these projects show different but complementary approaches: compute market, data market, and model marketplace.

Why it matters: When compute, data, and models each mature, practical vertical solutions (finance, healthcare, supply chain) can be built rather than one-off demos. The projects above represent those three layers.

Investment framing: risk and return in 2026

AI+crypto is still early-stage. Expect binary outcomes: either a project becomes critical infrastructure or it collapses under tokenomics and security issues. Plan for concentrated bets with strict position sizing. Focus on teams that publish security audits, transparent treasury plans, and real revenue. Projects tied to regulated data or cloud integrations have better odds of survival.

Why it matters: The path from product to protocol requires sustained adoption; token-driven speculation alone won’t create durable value. Prioritize project fundamentals and measurable usage over marketing narratives.

What enterprises are actually buying in 2026

Enterprises buy guarantees: SLAs, data provenance, and audit trails. They are less interested in tokens and more in on-chain proofs that enforce contract terms. Successful AI+crypto vendors sell legally compliant interfaces (custody, attestation, reporting) that bridge corporate risk committees with programmable settlement.

Short checklist for technical due diligence

Ask for on-chain proof-of-service logs, verifier reports for compute and data, token vesting schedules, and multi-sig governance on treasury funds. Verify integrations with at least one major cloud provider or regulated custodian. Prioritize platforms that publish third-party performance tests and clear upgrade paths.
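
To make "proof-of-service log" concrete, here is a minimal sketch of a receipt that binds a job ID to a hash of the compute output. It is an illustration under stated assumptions, not how any of the projects above implement it: the receipt fields and function names are hypothetical, and it uses a shared-key HMAC from the Python standard library as a stand-in for the public-key signatures a real network would use.

```python
import hashlib
import hmac
import json


def result_digest(output: bytes) -> str:
    """SHA-256 hash of the compute output, as a hex string."""
    return hashlib.sha256(output).hexdigest()


def _payload(job_id: str, digest: str) -> bytes:
    """Canonical byte encoding of the fields covered by the tag."""
    body = {"job_id": job_id, "result_sha256": digest}
    return json.dumps(body, sort_keys=True).encode()


def sign_receipt(job_id: str, output: bytes, node_key: bytes) -> dict:
    """Node side: issue a receipt binding job_id to the output hash.

    HMAC with a shared key is an assumption made to stay in the stdlib;
    a production system would use a digital signature instead.
    """
    digest = result_digest(output)
    tag = hmac.new(node_key, _payload(job_id, digest), hashlib.sha256).hexdigest()
    return {"job_id": job_id, "result_sha256": digest, "tag": tag}


def verify_receipt(receipt: dict, output: bytes, node_key: bytes) -> bool:
    """Buyer side: check the tag and that the output matches the logged hash."""
    payload = _payload(receipt["job_id"], receipt["result_sha256"])
    expected = hmac.new(node_key, payload, hashlib.sha256).hexdigest()
    tag_ok = hmac.compare_digest(expected, receipt["tag"])
    return tag_ok and receipt["result_sha256"] == result_digest(output)


key = b"demo-key"  # hypothetical key for the example only
receipt = sign_receipt("job-1", b"model output", key)
print(verify_receipt(receipt, b"model output", key))
print(verify_receipt(receipt, b"tampered output", key))
```

The point for due diligence is the shape of the check: a receipt is only useful if a third party can recompute the hash from the delivered result and confirm that the node committed to it.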

Table: Quick comparison snapshot (RNDR / Ocean / SingularityNET / Fetch.ai)

| Feature | RNDR | Ocean | SingularityNET | Fetch.ai |
|---|---|---|---|---|
| Primary focus | GPU compute marketplace | Data & compute-to-data | Model marketplace & governance | Autonomous agents & orchestration |
| 2026 traction signals | Redshift/Maxon integrations | Marketplace + datatokens | Developer workshops | Agent SDK releases |
| Enterprise appeal | Cost-effective distributed inference | Privacy-compliant data access | Model procurement governance | IoT/logistics automation |
| Biggest dependency | Node reliability & SLAs | Data provenance standards | Model licensing clarity | Agent adoption & standards |
| Citation examples | RNDR monthly reports | Ocean docs | AGIX events | Fetch.ai product posts |

Why it matters: Use this snapshot as a starting point—measure each project on the five due-diligence axes previously listed. Look for open metrics like paid jobs, not vanity metrics.

Deployment models that win enterprise deals

Hybrid deployments—private datasets in a regulated cloud for training with public settlement for licensing—are what sell. Projects that offer hybrid connectors (trusted execution environments, MPC gateways, compute attestations) win enterprise procurement processes.

Practical signal checklist for traders and allocators

Track weekly paid-job growth, cloud partnership announcements, independent audits, and treasury unlock schedules. When a technical milestone, a partnership, and revenue growth line up at the same time, the investment case shifts from speculative to tactical.
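
A small sketch of that rule, assuming weekly paid-job counts are available as a list. The 5% growth threshold and the three-week window are arbitrary assumptions for illustration, not recommendations.

```python
def weekly_growth(paid_jobs):
    """Week-over-week growth rates for a list of weekly paid-job counts.

    Weeks that follow a zero count are skipped to avoid dividing by zero.
    """
    return [
        (curr - prev) / prev
        for prev, curr in zip(paid_jobs, paid_jobs[1:])
        if prev > 0
    ]


def revenue_signal(paid_jobs, min_growth=0.05, weeks=3):
    """True if each of the last `weeks` growth rates is at least min_growth."""
    rates = weekly_growth(paid_jobs)
    return len(rates) >= weeks and all(r >= min_growth for r in rates[-weeks:])


def classify(tech_milestone, partnership, paid_jobs):
    """Label the case 'tactical' only when all three signals align."""
    signals = [tech_milestone, partnership, revenue_signal(paid_jobs)]
    return "tactical" if all(signals) else "speculative"


# Hypothetical weekly paid-job counts.
counts = [100, 110, 121, 140]
print(classify(True, True, counts))
print(classify(True, False, counts))
```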

Final operational advice

Don’t buy projects because of token hype. Buy access to services you or your clients need. If a protocol sells verifiable compute or licensed data that reduces operational cost or legal friction, its token may capture genuine demand. Treat AI+crypto as infrastructure bets, not lottery tickets.

FAQs

What is the single most reliable signal of product-market fit for AI+crypto projects?

Paid transactions and recurring enterprise buyers are the most reliable signals—token transfers or social buzz are not.

How do I verify a project’s compute claims?

Ask for independent benchmarks, reproducible tests, and cryptographic proof-of-service logs that tie compute results to job receipts.

Are tokens necessary for AI infrastructure value capture?

Not always. Tokens help align incentives, but value can be captured through fees, subscription models, or service-level contracts; tokens matter when they meaningfully reduce coordination friction.

Which sectors will adopt AI+crypto first?

Finance (trade surveillance and tokenized assets), supply chain (provenance + forecasting), and healthcare (privacy-preserving model access) are likely to adopt first, because they combine clear ROI with established regulatory frameworks.

How should I size a position in AI+crypto?

Treat these as venture-like bets: small initial allocations, staged increases on verifiable milestones, and strong stop-loss discipline. Favor projects with audited code and enterprise partnerships.
